Thank you! Now fixed…

Awesome, Shane!

This is very, very useful.

Thanks for all that you contribute so freely to the AppleScript community.

Shane, thanks again. This is looking really great!

One question has occurred to me:

Can the searches be limited to the immediate folder only (NOT including sub-folders), as with the Bash find command?

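use mdLib : script "Metadata Lib" -- assumes Metadata Lib is installed in ~/Library/Script Libraries

use scripting additions

-- This will return a list of POSIX paths for "theFolder" and ALL sub-folders.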

set theFolder to choose folder ## path to desktop

set txtFileList to mdLib's searchFolders:{theFolder} searchString:"kMDItemFSName ENDSWITH[c] %@" searchArgs:{".txt"}

### Can the search be limited to ONLY "theFolder" (no sub-folders),

# like the below Bash find command:

(*

Use the -maxdepth 1 option to make the search non-recursive, as in:

find ~/Documents/Test -maxdepth 1 -name "*.plist"

*)

No – you’d need to filter the results separately.
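For anyone who wants to do that filtering outside AppleScript, here is a minimal shell sketch (not from this thread). It reads one POSIX path per line, such as the output of mdfind -onlyin, and keeps only the paths whose containing folder is the one searched. The helper name filter_immediate_children is hypothetical, and it assumes plain absolute paths and a search folder other than /.

```shell
# filter_immediate_children (hypothetical helper): read POSIX paths, one per
# line, on stdin and print only those whose containing folder is exactly $1.
# Assumes absolute paths and that $1 is not the root folder "/".
filter_immediate_children() {
  parent=${1%/}                 # drop one trailing slash so comparisons match
  while IFS= read -r p; do
    # dirname strips the last path component, leaving the containing folder
    if [ "$(dirname "$p")" = "$parent" ]; then
      printf '%s\n' "$p"
    fi
  done
}
```

For example, mdfind -onlyin ~/Desktop -name .txt | filter_immediate_children ~/Desktop would drop every match found in a sub-folder. Note that Spotlight still does the full recursive search; this only trims the result.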

At the risk of repetition, let me add that while using mdfind like that is convenient, it’s also slow for this sort of thing. If I run these two scripts with my messy desktop:

paragraphs of (do shell script "find ~/Desktop -maxdepth 1 -name '*.txt'")

and:

use AppleScript version "2.4" -- Yosemite (10.10) or later

use framework "Foundation"

use scripting additions

set theFolder to "~/Desktop"

set theFolder to current application's NSString's stringWithString:theFolder

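-- contentsOfDirectoryAtPath:error: does a shallow (non-recursive) listing and returns item names rather than full paths

set {theFiles, theError} to current application's NSFileManager's defaultManager()'s contentsOfDirectoryAtPath:(theFolder's stringByExpandingTildeInPath()) |error|:(reference)

if theFiles is missing value then error (theError's localizedDescription() as text)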

set theFiles to (theFiles's pathsMatchingExtensions:{"txt"}) as list

The difference in time taken is more than an order of magnitude, in favour of the ASObjC version. And like find, the latter doesn’t rely on Spotlight indexing, so it’s potentially more robust than using Metadata Lib.

(Of course mdfind can do more complex finds that take more effort in ASObjC, in which case the difference might disappear.)
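As a rough shell counterpart to the ASObjC listing above, a plain glob also reads just one folder and never touches Spotlight. This is a sketch under simple assumptions: list_txt_shallow is a hypothetical name, matching is case-sensitive, and unlike contentsOfDirectoryAtPath:error: the glob skips names that start with a dot. It makes no claim about speed.

```shell
# list_txt_shallow (hypothetical helper): print the .txt files directly
# inside folder $1, with no recursion and no Spotlight involvement.
list_txt_shallow() {
  dir=${1%/}
  for f in "$dir"/*.txt; do
    # If nothing matches, the pattern is left unexpanded; the -f test skips
    # it, along with any folder whose name happens to end in .txt.
    [ -f "$f" ] || continue
    printf '%s\n' "$f"
  done
}
```

Unlike the ASObjC version, this prints full paths rather than bare names.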

It is very interesting that you just posted a script using the Bash find command!

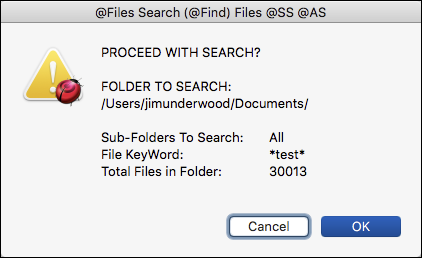

I have just finished building a full script doing the same, but with an extensive UI to allow the user to set:

- Parent search folder

- Number of sub-folder levels to include

- File name keyword

It works very fast. In a folder with 30,001 files, it took only:

TIME TO SEARCH for & Return Paths for 31 Files

STOP:

• Friday, August 4, 2017 at 10:06:27 PM

• EXECUTION TIME: 0.136 sec

When I get it cleaned up, I’ll post it.

I posted that only to show an example of where find takes 10 times as long to run.

find is one of my favourite tools. If I really need to be sure that something does or (more likely) doesn’t exist, find is the tool for the job as it actually traverses the entire tree of whatever parent directory you give it.

However, for that very reason, the price of find's thoroughness is its speed.

It’s never going to be faster than a metadata search, which is designed to have exactly the opposite attributes.

However, I have NOT seen a material difference in speed when using find.

As I reported above, find searched 30,000 files to find matches in 31, and it took only 0.136 sec.

I find that very acceptable.

Although that’s true, my 10x comparison was actually against NSFileManager. And it was for find with -maxdepth 1, a case where I’d argue using find doesn’t make much sense.

That’s an important point. As someone who has had more than my share of Spotlight-related problems over the years, the idea of relying on a completely accurate metadata index scares me. It’s a best-effort system at best, it seems to me.

I see more value in non-critical areas, especially getting metadata related to particular file types — for example, the dimensions of graphic files, page numbers of PDF files, duration of sound files, etc. That’s where it can be a big time-saver.

When you quote relative performance, I believe it is important to also provide the absolute performance in order to see the complete picture. While the ASObjC script was 10X faster than the find shell script, the actual times, per Script Geek (10 trials), were:

- ASObjC: 0.001 sec

- find: 0.009 sec

From an end user’s perspective, 0.008 sec is nothing, even if it is a 10X difference.

Even when I use find to search 30,000 files for text within the name, the performance is very, very acceptable at 0.136 sec. I haven’t tested it, but even if you assume an ASObjC equivalent is 10X faster, at about 0.014 sec, again, that is not material to the end user.

I have a lot of tools in my toolkit, and I try to select the one that is best for the job. Sometimes the simpler tool, although a bit slower, is the best for the job. Sometimes, if I need to process hundreds of thousands of items (very, very rare), then the more powerful, but more complex, tool is the best tool for the job.

Sorry Shane, but I have to disagree. There are many times I only want the files, or folders, in the parent folder. There are a number of ways to get this, but using find is a valid, viable option, especially if I can easily change the find statement to handle a variety of searches. I have already shown that the performance is very, very acceptable. As an example:

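-- folderToSearch (a POSIX path), subFoldersToSearch (e.g. "-maxdepth 1", or "" for all levels) and ptyKeyWord are set elsewhere in the script

-- quoted form of protects spaces and shell metacharacters in the folder path and keyword

set cmdStr to "find " & quoted form of folderToSearch & " " & subFoldersToSearch & ¬
	" -iname " & quoted form of ptyKeyWord

set filePathList to paragraphs of (do shell script cmdStr)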
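The same parameterized search can be wrapped as a small shell function. This is only a sketch: find_by_name is a hypothetical name, and an empty DEPTH stands for "all sub-folders". -maxdepth and -iname are accepted by both the macOS (BSD) and GNU versions of find.

```shell
# find_by_name DIR DEPTH PATTERN (hypothetical helper)
# DEPTH "" searches every level; otherwise it is passed to -maxdepth.
# PATTERN is matched case-insensitively against file names, e.g. '*invoice*'.
find_by_name() {
  dir=$1 depth=$2 pattern=$3
  if [ -n "$depth" ]; then
    find "$dir" -maxdepth "$depth" -iname "$pattern"
  else
    find "$dir" -iname "$pattern"
  fi
}
```

Quoting each argument ("$dir", "$pattern") keeps spaces and wildcards intact until find sees them, the same job quoted form of does on the AppleScript side.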

Shane, I think it may be hard for you to fully appreciate this, because you understand ASObjC and ObjC so well that they are nearly second nature to you. So I understand ASObjC being your preferred solution in almost all cases. But for some of us, or at least for me, it is still a very complex and verbose language with very little support, other than from you and a few others.

By contrast, Bash commands like find have lots of support. It is easy to find solutions and examples on the internet, and the Bash documentation is complete and easy to understand.

As with anything, different users will prefer different tools, have different styles. So be it.

I do really appreciate all the help you give us with ASObjC, from examples, explanations, and the script libraries like mdLib. I would not have any hope of using or understanding ASObjC without this. Thanks again.

Oh, absolutely. But it doesn’t hurt to challenge preconceptions — I’m sure most people would assume that find was the fastest option in the above case. As for simplicity — libraries were invented for hiding complexity away.

My main issue with find, though, is not speed but stuff like this:

paragraphs of (do shell script "find ~/Library/Script\\ Libraries -name '*.scpt'")

Oh, absolutely. One of the best and funniest quotes I heard years ago:

“Definition of an expert: A person with a closed mind.”

Well, I think you are the one making an assumption about what most people would assume. LOL

I really don’t know about what “most people” think these days – hard to tell.

But, applying a bit of experience and logic, it seems to me that since find is a Bash shell command, I would guess that many, if not “most”, Mac users either don’t have an opinion, or would think it is slower, because:

- Most Mac users (I’m guessing here) have little direct exposure to shell scripts.

- Almost every time someone posts a shell script in the AppleScript community forums, someone will be quick to point out how slow shell scripts are.

But I would NOT bet the farm either way.

I learned many, many years ago, as a freshman in a university band, that it is dangerous to “assume” because it will “make an a$$ of U and me”. LOL

Or so it seems to me.

You can have the last word on this, if you want it. I have nothing more of significance to add.

I don’t follow. What’s the objection to that?

The issue is that find doesn’t know ordinary directories from packages, so it walks the contents of the latter. Assuming you have multiple .scptd files there, you’ll get main.scpt appearing in the list multiple times. You then need some way of filtering them out.
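To see the problem without touching a real Script Libraries folder, this sketch builds a throwaway folder with one plain .scpt file and two .scptd bundles, then runs the same kind of find. The helper name make_demo_libraries is hypothetical, and the bundle layout (Contents/Resources/Scripts/main.scpt) is assumed to match what a saved script bundle contains; treat the tree as an illustration only.

```shell
# make_demo_libraries DIR (hypothetical helper): fill DIR with one plain
# .scpt file and two .scptd bundles, each holding a main.scpt.
make_demo_libraries() {
  touch "$1/Plain.scpt"
  for lib in One Two; do
    mkdir -p "$1/$lib.scptd/Contents/Resources/Scripts"
    touch "$1/$lib.scptd/Contents/Resources/Scripts/main.scpt"
  done
}

demo=$(mktemp -d)
make_demo_libraries "$demo"
# find treats each .scptd bundle as an ordinary folder and walks into it,
# so a main.scpt is reported once per bundle.
find "$demo" -name '*.scpt' | sed "s|^$demo/||" | sort
rm -rf "$demo"
```

It prints Plain.scpt plus one .../main.scpt path per bundle, so main.scpt appears twice.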

Thanks for pointing this out. It resulted in increasing my knowledge about the very powerful, very flexible, find command.

It would seem there are two easy solutions to this:

-not -path '*.scptd*'

-maxdepth 1

Also, I learned that the -H option makes find follow a symlinked starting folder (symlinks met further down the tree are followed only with -L). Excellent – I can process either way.

set cmdStr0 to "find -H ~/Library/Script\\ Libraries -name '*.scpt'"

set cmdStr1 to "find -H ~/Library/Script\\ Libraries -name '*.scpt' -not -path '*.scptd*'"

set cmdStr2 to "find -H ~/Library/Script\\ Libraries -maxdepth 1 -name '*.scpt'"

set fileList0 to paragraphs of (do shell script cmdStr0)

set fileList1 to paragraphs of (do shell script cmdStr1)

set fileList2 to paragraphs of (do shell script cmdStr2)

set counts to {count of fileList0, count of fileList1, count of fileList2}

return counts

--> {45, 32, 13}
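The difference between -H and -L is easy to check. In this sketch, count_scpt is a hypothetical helper and the demo tree is made up: the starting folder is itself a symlink, and a second symlink sits inside it. -P is find's default and follows neither, -H follows only the starting symlink, and -L follows both.

```shell
# count_scpt MODE DIR (hypothetical helper): count the .scpt files find
# reports under DIR when run with MODE (-P, -H or -L).
count_scpt() {
  mode=$1 dir=$2
  find "$mode" "$dir" -name '*.scpt' | wc -l | tr -d ' '
}

demo=$(mktemp -d)
mkdir -p "$demo/real/sub" "$demo/other"
touch "$demo/real/a.scpt" "$demo/other/b.scpt"
ln -s "$demo/other" "$demo/real/sub/linked"   # symlink met during the walk
ln -s "$demo/real" "$demo/start"              # symlinked starting folder

for mode in -P -H -L; do
  printf '%s: %s\n' "$mode" "$(count_scpt "$mode" "$demo/start")"
done
# Expected: "-P: 0", "-H: 1", "-L: 2"

rm -rf "$demo"
```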

That will work in this specific case, but it’s not a general solution to the problem — you can’t apply it elsewhere.

That’s no solution — a user may have .scpt files organized in subfolders.

I don’t see why not. You can exclude any folder you like.

But you gave a very specific case, and I gave you a solution that always works for searching for “scpt” files while excluding “scptd” packages.

That’s no solution — a user may have .scpt files organized in subfolders.

You may not like it, but it is a solution for searching the Script Libraries folder, where it is unlikely that I have script libraries in sub-folders.

What is your solution for searching only for “.scpt” files in the Script Libraries folder and all sub-folders?

Now that we know the find command will treat packages as regular folders, we can use the find tool appropriately, just like any other tool. No tool is perfect, or without its limitations. It is always best to know your tools before you use them, or be prepared for a lot of testing and debugging.

In any case, it seems to me that the user will most likely be searching on other criteria as well, like characters in the file name.

But, again, the Bash find command is but one tool in my tool kit. I’ll use it when it is the most appropriate tool for the job, including my time. I still have other tools if I need them.

I think that, in many use cases, the limitations you pointed out will seldom come up.

It is always best to know your tools before you use them

Exactly. I see lots of scripts built around tools like find that fail to account for the possibility of packages being treated as folders. It’s not that there aren’t ways around the problem — it’s that people overlook the limitation in the first place.
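One such workaround, sketched here rather than taken from the thread, is -prune: it stops find from descending into folders whose names look like packages while still searching ordinary sub-folders. Because find cannot ask macOS whether a folder really is a package, this only recognises the extensions listed (.scptd, .app and .bundle are an assumed starting set), which is exactly the kind of limitation under discussion. The function name is hypothetical.

```shell
# find_scpt_skipping_packages DIR (hypothetical helper)
# Left of -o: a folder with a package-like extension is pruned (not entered,
# not printed). Right of -o: anything else named *.scpt is printed.
find_scpt_skipping_packages() {
  find "$1" \
    -type d \( -name '*.scptd' -o -name '*.app' -o -name '*.bundle' \) -prune \
    -o -name '*.scpt' -print
}
```

Unlike -not -path '*.scptd*', which still walks every bundle and merely hides the matches, -prune skips the bundle contents entirely, and .scpt files in ordinary sub-folders are still found.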