I just updated to Ventura 13.2.1 from Monterey 12.6. Now scripts that use Speech Recognition in Voice Control no longer work. For instance, I have a simple GUI script to press the Down Arrow a specified # of times.

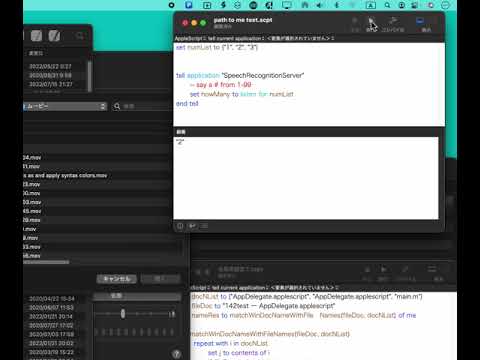

When I open the script for editing, the line that called Apple’s “SpeechRecognitionServer” has been changed to “AppleScript Utility,” which apparently doesn’t have a “listen for” command, and the “listen for” command itself has been changed to “«event sprcsrls»”. AppleScript Utility then throws an error:

{<LIST OF #s 1-99>} doesn’t understand the “«event sprcsrls»” message.

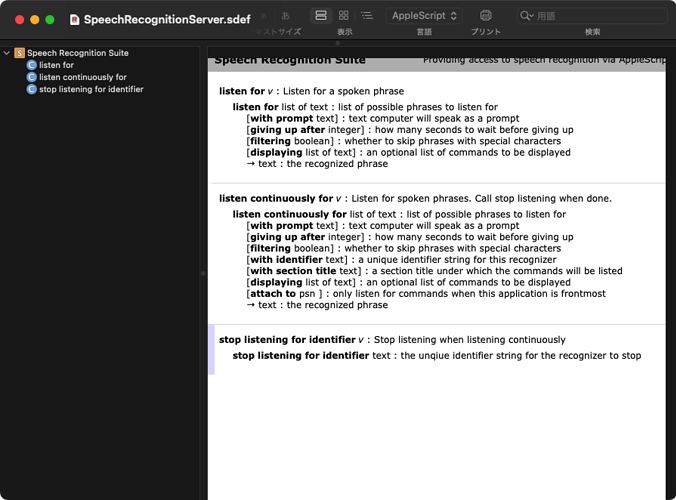

SpeechRecognitionServer is no longer listed in the Dictionary window.

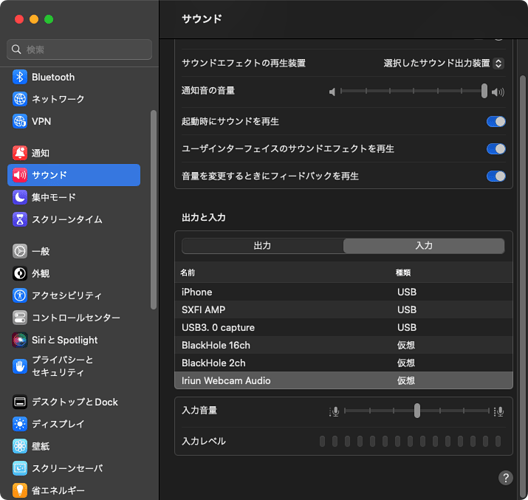

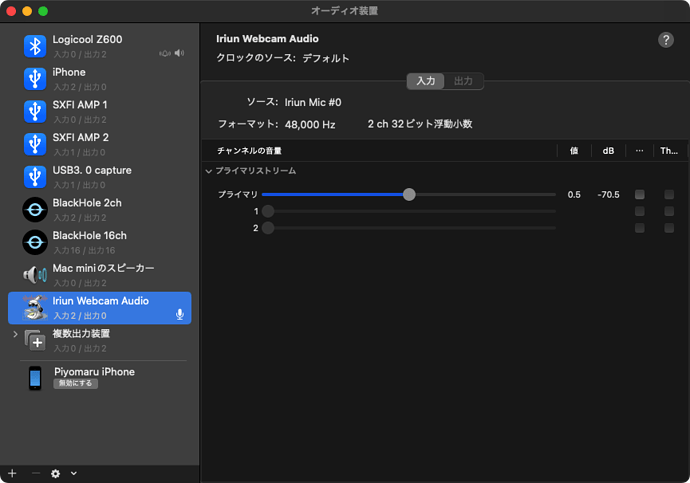

Apple still pays attention to Voice Control. In fact, they’ve upgraded it in Ventura by adding a Spelling Mode, so clearly some part of the system is still interpreting speech. Built-in commands with number input, like “Move forward <count> words,” still work. My commands require a separate input to specify the # after activating, because I don’t know a way to incorporate it with other words in a command, but until Ventura they were all working fine.

Does anyone know how to continue accessing SpeechRecognitionServer, or an alternate/replacement system utility that serves the same function?

Here’s the code for one of my custom commands:

global numList

set frontApp to (path to frontmost application as Unicode text)

set numList to {}

set numList to CreateNumList(numList)

--THE CODE IS SUPPOSED TO BE:

tell application "SpeechRecognitionServer"

-- say a # from 1-99

set howMany to listen for numList

end tell

--COMPILING CHANGES THE CODE TO:

(*

tell application "AppleScript Utility"

set howMany to «event sprcsrls» numList

end tell

*)

tell application "System Events"

tell application process frontApp

repeat with i from 1 to howMany

key code 125 --Down Arrow key

delay 0.02

end repeat

end tell

end tell

on CreateNumList(theNumbers as list)

repeat with i from 1 to 99

set end of theNumbers to i

end repeat

return theNumbers

end CreateNumList
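
As a stopgap while “listen for” is unavailable, the count could come from a dialog instead of from speech. This is only a minimal sketch, and it rests on an assumption that isn't tested here: that a typed (or dictated) number is an acceptable substitute for the spoken one. It uses `display dialog` from Standard Additions and doesn't touch SpeechRecognitionServer at all, so it doesn't answer the question above.

```applescript
-- Stopgap sketch: ask for the count in a dialog instead of listening for it.
-- Assumption: a typed or dictated number can stand in for "listen for".
set howMany to 0
try
	set reply to display dialog "How many times? (1-99)" default answer "1"
	set howMany to (text returned of reply) as integer
on error errMsg number errNum
	-- -128 means the user pressed Cancel; anything else is most likely a non-numeric entry
	if errNum is not -128 then display dialog "Not a usable number: " & errMsg buttons {"OK"} default button "OK"
	return
end try

-- Clamp to the same 1-99 range the original numList covered
if howMany < 1 then set howMany to 1
if howMany > 99 then set howMany to 99

tell application "System Events"
	repeat with i from 1 to howMany
		key code 125 -- Down Arrow
		delay 0.02
	end repeat
end tell
```

The clamp stands in for the 1-99 list that `listen for` used to constrain the input. The dialog takes focus while it is open, so the key presses go to whichever app is frontmost after it closes.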